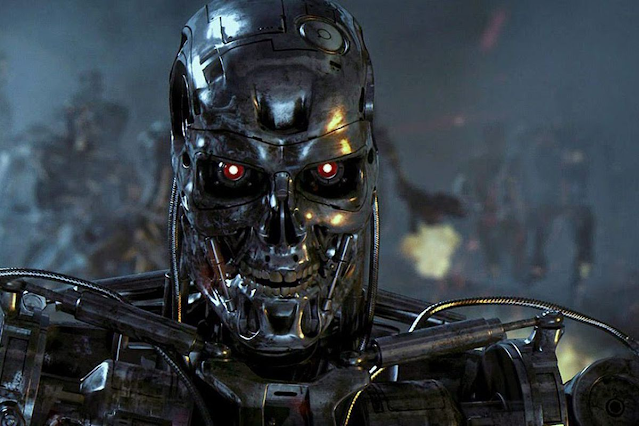

We are on the cusp of AI developing traits or adapting in the same way living organisms do through evolution.

Moth wing patterns, which often include structures resembling “owl eyes,” are a prime example of adaptation for survival. These eye-like markings are intricate patterns that evolved over millions of years through natural selection. Initially, moths developed cryptic colouration to blend into their environments and evade predators. Over time, some species developed wing scales with microstructures that reduced light reflection, helping them remain inconspicuous. These structures eventually evolved into complex arrays resembling the texture of eyes to deter predators, a phenomenon called “eyespot mimicry.” This adaptation, which works by inducing errors in a predator’s perception, likely startled or confused predators, giving those moths an advantage: precious moments to escape. The gradual development of these eye-like patterns underscores the interplay between environmental pressures and biological responses, which has produced the remarkable diversity of moth wing patterns seen today. Critically, moth...