“Possible Minds” 25 ways of looking at AI edited by John Brockman

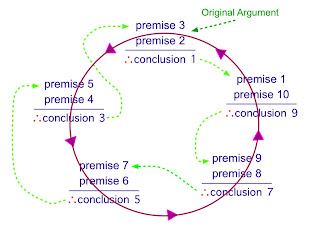

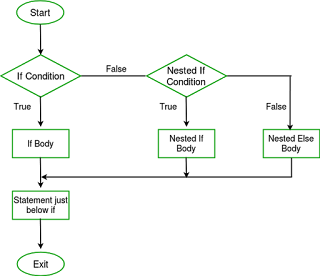

This is one of the best books on AI right now, in 2019. That is not because of any deep technical insight but because it presents many (25) arguments about different aspects of AI, and shows why there cannot be one unified vision or view. It is one to read simply because you finish with more questions than answers, which is in part a Richard Feynman quote. It lets you explore your own views about AI: you will align with different parts of the viewpoints presented, and you will end up creating a mashup of them all in which you feel comfortable. The takeaway from the book is the framework rather than the content. The challenge it lays down is to keep up with the 25 themes as they develop, morph, link, combine, fracture and fork. The themes that conflict with your own views and beliefs will be the most difficult, and there are lots of them. What follows is, for me, some personal interpretation and thinking.